The next step in understanding how to build a proper agentic system is to explore how an agent can be extended with tools. Tools are, in my view, the most powerful extension of an LLM, as they allow it to interact with the world: to get additional context and to take action. See my older blog post on that topic: https://www.christianmenz.ch/ai/exploring-agentic-ai-from-assistants-to-action-takers/

MCP (Model Context Protocol)

So we have a dozen frameworks to build agents, and each uses a slightly different approach to integrating tools. Writing tools isn’t super hard, but it is still not easy to take a tool I wrote for CrewAI and use it as is with LangGraph. But what if we could write tools once and plug them into all sorts of different agentic frameworks? Say hello to MCP, the “USB-C port for AI applications”, as Anthropic calls it. But what is MCP exactly?

MCP (Model Context Protocol) is a protocol that standardizes how tools can be exposed to and invoked by LLM-based agents. It defines a structured, language-agnostic interface that allows tools to be plugged into different agent frameworks without rewriting them. Think of it as a universal adapter: once a tool speaks MCP, any compatible agent can understand and use it.
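To make “language-agnostic” a bit more concrete: MCP messages are JSON-RPC 2.0, and a client discovers the available tools with a `tools/list` request and invokes one with `tools/call`, passing the tool name and its arguments. The sketch below only builds those two request messages with the standard library. The request IDs, the `list_directory` tool name and the `/mnt` path are illustrative assumptions, and a real session also performs an `initialize` handshake first; the MCP SDK takes care of all of this for you.

```python
import json
from typing import Optional


def jsonrpc_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it offers.
list_request = jsonrpc_request(1, "tools/list")

# Invoke one tool by name with JSON arguments (tool name and path are illustrative).
call_request = jsonrpc_request(
    2, "tools/call", {"name": "list_directory", "arguments": {"path": "/mnt"}}
)

print(list_request)  # {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(call_request)
```

Because the contract is just these messages, any framework that can send them (BeeAI, LangGraph, CrewAI, …) can use the same server.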

In practice, this means I can plug useful tools directly into my agent and let it start doing things. The good news is that the protocol is being adopted by various frameworks, including BeeAI, the framework I’m currently looking into. I hope and expect other frameworks to add support for MCP too.

Connecting MCP with BeeAI

If you do a web search on MCP tools, you will find a ton of reusable tools. For the demo I will use the filesystem MCP server, which equips the agent with tools to navigate, read and write the filesystem and do all sorts of magic.

Although plugging in an MCP tool doesn’t look simple at first, it is actually quite doable. I think the documentation and examples could be improved to make the getting-started experience more fun, but in a nutshell:

- We start the MCP server

- We connect the client

- We give the agent the tools at its disposal and a specific task to fulfill

The tools

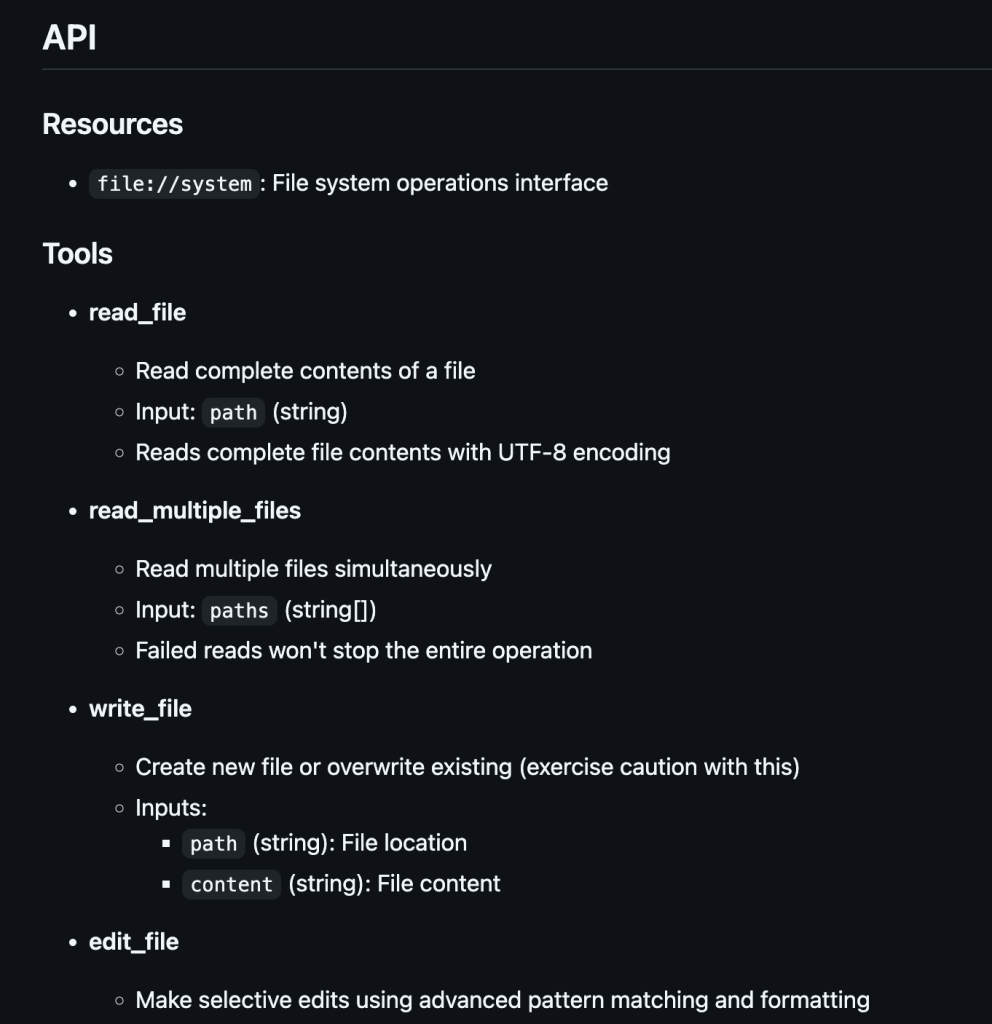

First, let’s look at how the MCP server is documented. The documentation describes the server’s features, lists its tools and explains how to run it. I’m using Docker, so that section is the relevant one for me.

Features

Alright, so this MCP server allows me to manage files. Exactly what I was looking for.

Tools

These are the tools we extend our agent with; for example, one of them allows the agent to read files from the file system.
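The same list can also be retrieved from the running server itself, which is handy for checking what the agent will actually see. A minimal sketch, assuming an already initialized `mcp.ClientSession` (such as `session_fs` in the code further down) whose `list_tools()` result exposes `.tools`, each with a `name` and an optional `description`. The helper `describe_tools` and the `FakeSession` stand-in are hypothetical and only exist so the sketch runs without Docker.

```python
import asyncio
from types import SimpleNamespace


async def describe_tools(session) -> list[str]:
    """Return one 'name: description' line per tool the MCP server advertises.

    `session` is assumed to be an initialized mcp.ClientSession; only its
    list_tools() coroutine is used, so any object providing it will do.
    """
    result = await session.list_tools()
    # Each tool carries a name, an optional description and a JSON input schema.
    return [f"{tool.name}: {tool.description or ''}" for tool in result.tools]


class FakeSession:
    """Hypothetical stand-in for ClientSession so the sketch runs without Docker."""

    async def list_tools(self):
        tool = SimpleNamespace(name="read_file", description="Read a file", inputSchema={})
        return SimpleNamespace(tools=[tool])


if __name__ == "__main__":
    for line in asyncio.run(describe_tools(FakeSession())):
        print(line)  # read_file: Read a file
```

With a real connection, `print(await describe_tools(session_fs))` inside the session block would print the server’s actual tool list.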

Integration

How do I start the MCP server? Typically, it can be started as a Docker container or via NPX.
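Either way, launching the server boils down to a command plus its arguments, which is exactly what the client needs later. A minimal sketch with a hypothetical helper `filesystem_server_command`: the Docker arguments mirror the ones used in this post, while the NPX variant assumes the `@modelcontextprotocol/server-filesystem` npm package and a local Node.js installation.

```python
import os


def filesystem_server_command(host_dir: str, use_docker: bool = True) -> tuple[str, list[str]]:
    """Return (command, args) for launching the filesystem MCP server over stdio."""
    if use_docker:
        # -i keeps stdin open so the client can talk to the server over stdio;
        # the host directory is mounted at /mnt, which becomes the allowed root.
        return "docker", ["run", "--rm", "-i", "-v", f"{host_dir}:/mnt", "mcp/filesystem", "/mnt"]
    # NPX variant: runs the npm package directly on the host (requires Node.js).
    return "npx", ["-y", "@modelcontextprotocol/server-filesystem", host_dir]


if __name__ == "__main__":
    command, args = filesystem_server_command(os.getcwd())
    print(command, args)
```

The returned pair can be passed straight into `StdioServerParameters(command=command, args=args)`. Docker keeps the server isolated from the host; NPX avoids the container but gives the server direct access to the host filesystem paths you pass in.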

Code

Now how can we integrate that with BeeAI? Let’s explore the code.

Define the MCP server parameters

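```python
import os

from mcp import StdioServerParameters

# Filesystem MCP: run the mcp/filesystem Docker image as a stdio server
fs_params = StdioServerParameters(
    command="docker",
    args=[
        "run", "--rm", "-i",          # remove the container on exit, keep stdin open for stdio
        "-v", f"{os.getcwd()}:/mnt",   # mount the current working directory at /mnt
        "mcp/filesystem",              # image name
        "/mnt",                        # directory the server is allowed to access
    ],
)
```

Connecting the tools with the agent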

Via the MCPTool helper, we can load the tools and equip our agent with them. Best practice is to filter the list so that the agent only gets the tools it actually needs; in this example, we simply give it all the filesystem tools listed in the documentation.
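Filtering itself is plain list work. A minimal sketch, assuming each tool object exposes a `name` attribute (as BeeAI tools do). The helper `filter_tools` is hypothetical, and the tool names are illustrative, so check them against the filesystem server’s current tool list.

```python
from types import SimpleNamespace


def filter_tools(tools, allowed: set[str]) -> list:
    """Keep only the tools whose name is in `allowed`, preserving their order."""
    return [tool for tool in tools if tool.name in allowed]


# Stand-ins for the objects returned by MCPTool.from_client(...)
fs_tools = [SimpleNamespace(name=n) for n in ("read_file", "write_file", "list_directory", "move_file")]

# A read-only agent only needs to list and read.
read_only = filter_tools(fs_tools, {"read_file", "list_directory"})
print([t.name for t in read_only])  # ['read_file', 'list_directory']
```

Inside `create_agent`, this would become something like `tools = filter_tools(await MCPTool.from_client(session_fs), {"read_file", "list_directory"})`. Fewer tools mean a shorter prompt for the LLM and less room for unintended actions such as overwriting files.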

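```python
# BeeAI import paths may differ between framework versions
from mcp import ClientSession
from beeai_framework.agents.react import ReActAgent
from beeai_framework.backend import ChatModel, ChatModelParameters
from beeai_framework.memory import TokenMemory
from beeai_framework.tools import AnyTool
from beeai_framework.tools.mcp import MCPTool


async def create_agent(session_fs: ClientSession) -> ReActAgent:
    # Azure OpenAI model; temperature=0 for more predictable tool use
    llm = ChatModel.from_name(
        "azure_openai:gpt-4.1-mini",
        ChatModelParameters(temperature=0),
    )
    # Load every tool exposed by the filesystem MCP server
    fs_tools = await MCPTool.from_client(session_fs)
    tools: list[AnyTool] = fs_tools
    agent = ReActAgent(llm=llm, tools=tools, memory=TokenMemory(llm))
    return agent
```

Running the agent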

Now let’s use the server parameters to open an actual client session, create the agent and give it a task.

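```python
import asyncio

from mcp import ClientSession
from mcp.client.stdio import stdio_client
from beeai_framework.agents.types import AgentExecutionConfig


async def main() -> None:
    # Launch the MCP server (fs_params above) and open a client session over stdio
    async with stdio_client(fs_params) as (read_fs, write_fs), \
            ClientSession(read_fs, write_fs) as session_fs:
        await session_fs.initialize()  # MCP handshake before any tool calls
        agent = await create_agent(session_fs)
        prompt = "Read the files in the /tmp directory and create a README file with a summary of the files."
        result = await agent.run(
            prompt=prompt,
            execution=AgentExecutionConfig(max_retries_per_step=2, total_max_retries=5, max_iterations=10),
        )
        print("\nAgent answer:\n", result.result.text)


if __name__ == "__main__":
    asyncio.run(main())
```

Demo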

Now the magic happens. The agent is equipped with tools to explore and manage the file system and automagically uses them to execute the given task:

```text
% python hello.py
Secure MCP Filesystem Server running on stdio
Allowed directories: [ '/mnt' ]

Agent answer:
Here is a summary for the README file based on the files in the /mnt directory:

# README

## Summary of Files in /mnt Directory

- **.env**: This file contains environment variables including API keys and configuration settings for Azure OpenAI services.
- **hello.py**: This Python script sets up an asynchronous agent using the BeeAI framework. It loads environment variables, creates a ReActAgent with tools for filesystem interaction, and runs a prompt to read files in the /tmp directory and create a README file with a summary of the files.
- **.venv**: This is a directory likely containing a Python virtual environment for managing dependencies.
```

Since the server only allows access to /mnt (the mounted working directory), the agent summarized that directory rather than /tmp.

Conclusion

When I first read about MCP, it felt a bit abstract. Actually building something that uses MCP to extend an agent with interesting, reusable tools feels a bit like handing the agent a Swiss Army knife, and a powerful LLM will know how to use it.